IN TODAY'S EDITION

⌛ Use Case

NodeOutOfDisk Error: Troubleshooting and Fixes

🚀 Top News

📚️ Resources:

Learn Something New: A tutorial for Selenium automation testing enthusiasts.

Want to prepare for interviews and certifications?

Before we begin, a big thank you to our friend for their support.

Inviul is a multi-niche learning platform. It covers topics such as Selenium, Appium, Cucumber, Java, and many more.

USE CASE

NodeOutOfDisk Error: Troubleshooting and Fixes

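The NodeOutOfDisk error occurs when a Kubernetes node runs out of available disk space. This can prevent pods from being scheduled or started on the affected node, because Kubernetes needs free disk space for container images, logs, and pod storage. Older Kubernetes releases reported this through the OutOfDisk node condition; newer releases report the DiskPressure condition instead, and the kubelet may evict pods to reclaim space.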

Possible Causes

Low Disk Space on the Node:

The disk is nearly full due to log files, container images, or persistent volume data.

Large or Excessive Log Files:

Applications or system processes generating large log files without proper log rotation.

Abandoned or Orphaned Containers:

Stale containers or images that were not cleaned up after use.

Mismanaged Persistent Volumes:

Persistent Volumes (PVs) backed by the node's own disk (for example, local or hostPath volumes) occupying significant space without proper deletion or reclaim policies.

Improper Configuration of Disk Resource Requests:

Pods requesting excessive storage resources, or pods with no ephemeral-storage limits at all.

Node Misconfiguration:

Disk quotas or policies improperly configured, leading to unanticipated space usage.

High Temporary Storage Usage:

Pods using /tmp or other ephemeral storage without cleanup.

Troubleshooting Steps

✅ Check Node Status:

kubectl describe node <node-name>

Look for an OutOfDisk condition (or DiskPressure, on newer Kubernetes versions) with status True in the Conditions section.
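Describing nodes one at a time gets slow on larger clusters. A minimal bash sketch, assuming kubectl access: the hypothetical helper find_disk_pressure_nodes reads one line per node (the node name followed by Type=Status pairs) and prints every node whose OutOfDisk or DiskPressure condition is True.

```shell
# Hypothetical helper: print the names of nodes that report a disk-related condition as True.
# Input on stdin: one line per node, "<node> <Type>=<Status> <Type>=<Status> ...".
# OutOfDisk covers older clusters; DiskPressure covers current ones.
find_disk_pressure_nodes() {
  awk '{
    for (i = 2; i <= NF; i++) {
      split($i, kv, "=")   # kv[1] = condition type, kv[2] = status
      if ((kv[1] == "OutOfDisk" || kv[1] == "DiskPressure") && kv[2] == "True") {
        print $1           # node name
        break              # report each node only once
      }
    }
  }'
}
```

Feed it from a jsonpath query that prints each node's conditions on one line:

kubectl get nodes -o jsonpath='{range .items[*]}{.metadata.name}{range .status.conditions[*]}{" "}{.type}{"="}{.status}{end}{"\n"}{end}' | find_disk_pressure_nodes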

✅ Inspect Disk Usage:

SSH into the affected node and use:

df -h

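Identify which partition is full. It is often the one holding /var/lib/kubelet, /var/log, or the container runtime's data directory (for example, /var/lib/containerd or /var/lib/docker).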
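On a node with many mounts, a small filter can show only the filesystems above a usage threshold. A minimal bash sketch, assuming POSIX-format `df -P` output (which keeps each filesystem on a single line); the name full_partitions and the 85% default are illustrative choices, not part of any tool.

```shell
# Hypothetical helper: list filesystems whose usage is at or above a threshold.
# Input on stdin: POSIX-format output of `df -P` (header line, then one line per filesystem).
# Output: "<mount point> <use%>" per matching filesystem.
# Mount points containing spaces are truncated to their first word.
full_partitions() {
  local threshold="${1:-85}"   # percent; 85 is only an example default
  awk -v limit="$threshold" 'NR > 1 {
    use = $5                    # Capacity column, e.g. "91%"
    sub(/%$/, "", use)          # strip the trailing percent sign
    if (use + 0 >= limit + 0) print $6, $5
  }'
}
```

Usage on the node: df -P | full_partitions 90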

✅ Analyze Pod Disk Usage:

Check the size of logs and data stored by pods:

du -sh /var/lib/kubelet/pods/* /var/log/pods/* | sort -h
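Directory names under /var/lib/kubelet/pods are pod UIDs, which are hard to read. A minimal bash sketch, assuming you can run kubectl somewhere and copy its output to the node: the hypothetical label_pod_usage joins `du` output with a UID-to-pod map, so each size is shown next to its namespace and pod name.

```shell
# Hypothetical helper: label `du -sh /var/lib/kubelet/pods/*` output with pod names.
# $1: file with lines "<pod-uid> <namespace>/<pod-name>" (see the kubectl command below).
# stdin: du output, "<size><TAB><path>", where the last path component is the pod UID.
label_pod_usage() {
  local uid_map="$1"
  awk 'FILENAME == ARGV[1] { name[$1] = $2; next }   # first file: build the UID -> pod map
       {
         n = split($2, parts, "/")
         uid = parts[n]                               # last path component = pod UID
         print $1, ((uid in name) ? name[uid] : "unknown:" uid)
       }' "$uid_map" -
}
```

Usage (the file name pod-uids.txt is just an example):

kubectl get pods --all-namespaces -o jsonpath='{range .items[*]}{.metadata.uid}{" "}{.metadata.namespace}{"/"}{.metadata.name}{"\n"}{end}' > pod-uids.txt
du -sh /var/lib/kubelet/pods/* | sort -h | label_pod_usage pod-uids.txt

UIDs missing from the map are printed as unknown:<uid>; they may belong to pods deleted after the map was generated, or to leftover data worth a closer look.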

✅ Clean Up Stale Resources:

Remove unused container images:

docker image prune -a

On nodes that use containerd or CRI-O instead of Docker, use crictl:

crictl rmi --prune

Force-delete pods stuck in the Terminating state:

kubectl get pods --all-namespaces | grep Terminating
kubectl delete pod <pod-name> -n <namespace> --grace-period=0 --force
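When many pods are stuck, typing each delete command by hand is error-prone. A minimal bash sketch, assuming the default column layout of `kubectl get pods --all-namespaces` (NAMESPACE, NAME, READY, STATUS, ...): the hypothetical terminating_pod_delete_cmds prints, but does not run, one force-delete command per Terminating pod, so the list can be reviewed first.

```shell
# Hypothetical helper: turn `kubectl get pods --all-namespaces` output into force-delete
# commands for pods stuck in Terminating. It only prints the commands; nothing is deleted.
# Assumes the default columns: NAMESPACE NAME READY STATUS RESTARTS AGE.
terminating_pod_delete_cmds() {
  awk 'NR > 1 && $4 == "Terminating" {
    printf "kubectl delete pod %s -n %s --grace-period=0 --force\n", $2, $1
  }'
}
```

Usage: kubectl get pods --all-namespaces | terminating_pod_delete_cmds, then pipe the reviewed output to sh. Keep in mind that a force delete only removes the pod object from the API server; if the node is unreachable, its containers may keep running there.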

✅ Inspect Logs:

Review logs for excessive growth:

journalctl --disk-usage

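Rotate or trim logs if necessary. For example, this removes the oldest archived systemd journal files until they take up less than 500M:

journalctl --vacuum-size=500M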
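Rotating or trimming logs by hand frees space once, but the journal grows back. One way to cap it permanently is a journald drop-in using the SystemMaxUse= option. A minimal bash sketch; the function name, the 500M default, and the drop-in file name in the usage lines are illustrative assumptions.

```shell
# Hypothetical helper: print a systemd-journald drop-in that caps persistent journal storage.
# $1: maximum size in the format accepted by SystemMaxUse= (500M is only an example default).
render_journald_limit() {
  local max_use="${1:-500M}"
  cat <<EOF
[Journal]
SystemMaxUse=${max_use}
EOF
}
```

Usage on the node, as root:

mkdir -p /etc/systemd/journald.conf.d
render_journald_limit 500M > /etc/systemd/journald.conf.d/90-size-limit.conf
systemctl restart systemd-journald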

✅ Verify Volume Management:

Check Persistent Volume Claims (PVCs) and Persistent Volumes (PVs) for unneeded storage:

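kubectl get pvc --all-namespaces
kubectl delete pvc <pvc-name> -n <namespace>

Deleting a PVC whose volume uses the Delete reclaim policy also deletes the underlying data, so confirm the claim is no longer needed first.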
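PersistentVolumes with the Retain reclaim policy stay behind in the Released phase after their claim is deleted, still holding their data. A minimal bash sketch, assuming kubectl access: the hypothetical released_pvs filters a custom-columns listing and prints each Released volume with its capacity.

```shell
# Hypothetical helper: list PersistentVolumes in the Released phase.
# Input on stdin: "<name> <phase> <capacity>" lines (see the kubectl command below).
# Output: "<name> <capacity>" for each Released volume.
released_pvs() {
  awk '$2 == "Released" { print $1, $3 }'
}
```

Usage:

kubectl get pv --no-headers -o custom-columns=NAME:.metadata.name,PHASE:.status.phase,CAPACITY:.spec.capacity.storage | released_pvs

Released volumes are not reused automatically. Once you are sure the data is no longer needed, delete them with kubectl delete pv <pv-name>, and for local or hostPath volumes also remove the data directory on the node.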

✅ Restart Kubelet:

If the node status does not update after cleanup:

systemctl restart kubelet

Preventive Approaches

Set Up Disk Monitoring:

Use tools like Prometheus and Grafana to monitor disk usage.

Set alerts to notify when disk usage exceeds a defined threshold.
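As an example of such an alert, here is a Prometheus alerting rule built on the node_exporter filesystem metrics node_filesystem_avail_bytes and node_filesystem_size_bytes. A minimal sketch, assuming node_exporter is scraped on every node; the rule name, the excluded filesystem types, the 10-minute "for" duration, and the 85% default are illustrative choices. It is wrapped in a bash function only so the threshold can be passed in.

```shell
# Hypothetical helper: print a Prometheus alerting rule that fires when a filesystem
# stays above the given usage percentage for 10 minutes.
# $1: usage threshold in percent (85 is only an example default).
render_disk_alert_rule() {
  local threshold="${1:-85}"
  # \$labels is escaped so the heredoc emits it literally for Prometheus templating.
  cat <<EOF
groups:
  - name: node-disk
    rules:
      - alert: NodeDiskAlmostFull
        expr: (1 - node_filesystem_avail_bytes{fstype!~"tmpfs|overlay"} / node_filesystem_size_bytes{fstype!~"tmpfs|overlay"}) * 100 > ${threshold}
        for: 10m
        labels:
          severity: warning
        annotations:
          summary: "Filesystem {{ \$labels.mountpoint }} on {{ \$labels.instance }} is over ${threshold}% full"
EOF
}
```

Usage: render_disk_alert_rule 85 > node-disk-rules.yml, then validate it with promtool check rules node-disk-rules.yml before loading it into Prometheus.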

Implement Log Rotation:

Configure log rotation for system and application logs (e.g., using logrotate).
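A logrotate policy for an application's log files could look like the sketch below. It assumes a hypothetical application writing to /var/log/myapp/*.log; the daily schedule and retention count are example values. Container stdout/stderr logs are rotated by the kubelet itself (containerLogMaxSize and containerLogMaxFiles in the kubelet configuration), so this is aimed at host and application log files.

```shell
# Hypothetical helper: print a logrotate stanza for the given log file pattern.
# $1: log file glob (quote it so the shell does not expand it)
# $2: number of rotated files to keep (7 is only an example default)
render_logrotate_config() {
  local log_glob="$1"
  local keep="${2:-7}"
  cat <<EOF
${log_glob} {
    daily
    rotate ${keep}
    compress
    missingok
    notifempty
    copytruncate
}
EOF
}
```

Usage, as root: render_logrotate_config '/var/log/myapp/*.log' 7 > /etc/logrotate.d/myapp

copytruncate lets the application keep writing to the same open file, at the cost of possibly losing a few lines written during the copy; drop it if the application can reopen its log file on a signal.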

Regular Disk Cleanup:

Automate cleanup of unused images and containers using tools like kube-image-cleanup.
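A minimal bash sketch of a scheduled cleanup that works whether a node runs Docker or a CRI runtime such as containerd. It assumes crictl or the docker CLI is installed on the node; the function name is hypothetical, and you would call it from a cron job or systemd timer.

```shell
# Hypothetical helper: remove container images that no container on this node uses.
# Prefers crictl (containerd / CRI-O nodes) and falls back to the docker CLI.
prune_unused_images() {
  if command -v crictl >/dev/null 2>&1; then
    crictl rmi --prune            # remove all images not used by any container
  elif command -v docker >/dev/null 2>&1; then
    docker image prune -a -f      # -a: all unused images, -f: no prompt (needed under cron)
  else
    echo "prune_unused_images: neither crictl nor docker found" >&2
    return 1
  fi
}
```

The kubelet also garbage-collects unused images on its own once disk usage passes imageGCHighThresholdPercent in its configuration, so a scheduled prune complements that mechanism rather than replacing it.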

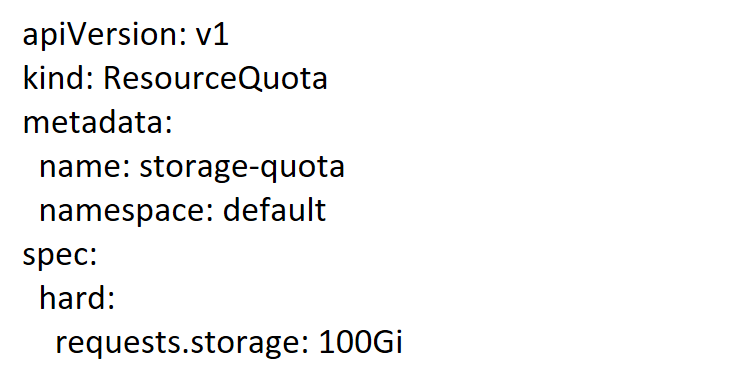

Use Resource Quotas:

Enforce namespace-wide storage limits for pods and PersistentVolumeClaims using ResourceQuota objects.
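A ResourceQuota limits the total storage requested across one namespace, not per pod. A minimal bash sketch, assuming a namespace named team-a and example limits; the function and object names are hypothetical. The manifest is printed by a function so it can be piped straight into kubectl.

```shell
# Hypothetical helper: print a ResourceQuota manifest capping storage in one namespace.
# $1: namespace
# $2: cap for ephemeral-storage requests and limits (example default 20Gi)
# $3: cap for total PVC storage requests (example default 100Gi)
render_storage_quota() {
  local namespace="$1"
  local ephemeral="${2:-20Gi}"
  local pvc_storage="${3:-100Gi}"
  cat <<EOF
apiVersion: v1
kind: ResourceQuota
metadata:
  name: storage-quota
  namespace: ${namespace}
spec:
  hard:
    requests.ephemeral-storage: ${ephemeral}
    limits.ephemeral-storage: ${ephemeral}
    requests.storage: ${pvc_storage}
EOF
}
```

Usage: render_storage_quota team-a 20Gi 100Gi | kubectl apply -f -

Once a quota tracks ephemeral-storage, pods in that namespace that do not declare ephemeral-storage requests and limits may be rejected, so pairing the quota with a LimitRange that sets defaults is worth considering.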

Capacity Planning:

Ensure nodes have sufficient disk capacity to accommodate workloads, with buffers for unexpected growth.

Optimize Application Storage:

Minimize the use of local disk for temporary storage and offload data to external storage solutions.
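One way to keep temporary files from filling a node is to bound them explicitly. A minimal bash sketch, assuming a hypothetical pod named scratch-demo running busybox: /tmp is backed by an emptyDir volume with a sizeLimit, and the container declares ephemeral-storage requests and limits. If either bound is exceeded, the kubelet evicts the pod instead of letting it fill the node's disk.

```shell
# Hypothetical helper: print a Pod manifest whose scratch space (/tmp) is size-bounded.
# $1: pod name. Image, command, and sizes are example values only.
render_scratch_pod() {
  local name="$1"
  cat <<EOF
apiVersion: v1
kind: Pod
metadata:
  name: ${name}
spec:
  containers:
    - name: app
      image: busybox:1.36
      command: ["sh", "-c", "sleep 3600"]
      resources:
        requests:
          ephemeral-storage: 1Gi
        limits:
          ephemeral-storage: 2Gi
      volumeMounts:
        - name: scratch
          mountPath: /tmp
  volumes:
    - name: scratch
      emptyDir:
        sizeLimit: 1Gi
EOF
}
```

Usage: render_scratch_pod scratch-demo | kubectl apply -f -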